Efficient PDF Text Extraction with Vision Language Models: Why olmOCR Changes the Game

MISTRAL OCR Team

March 3, 2025

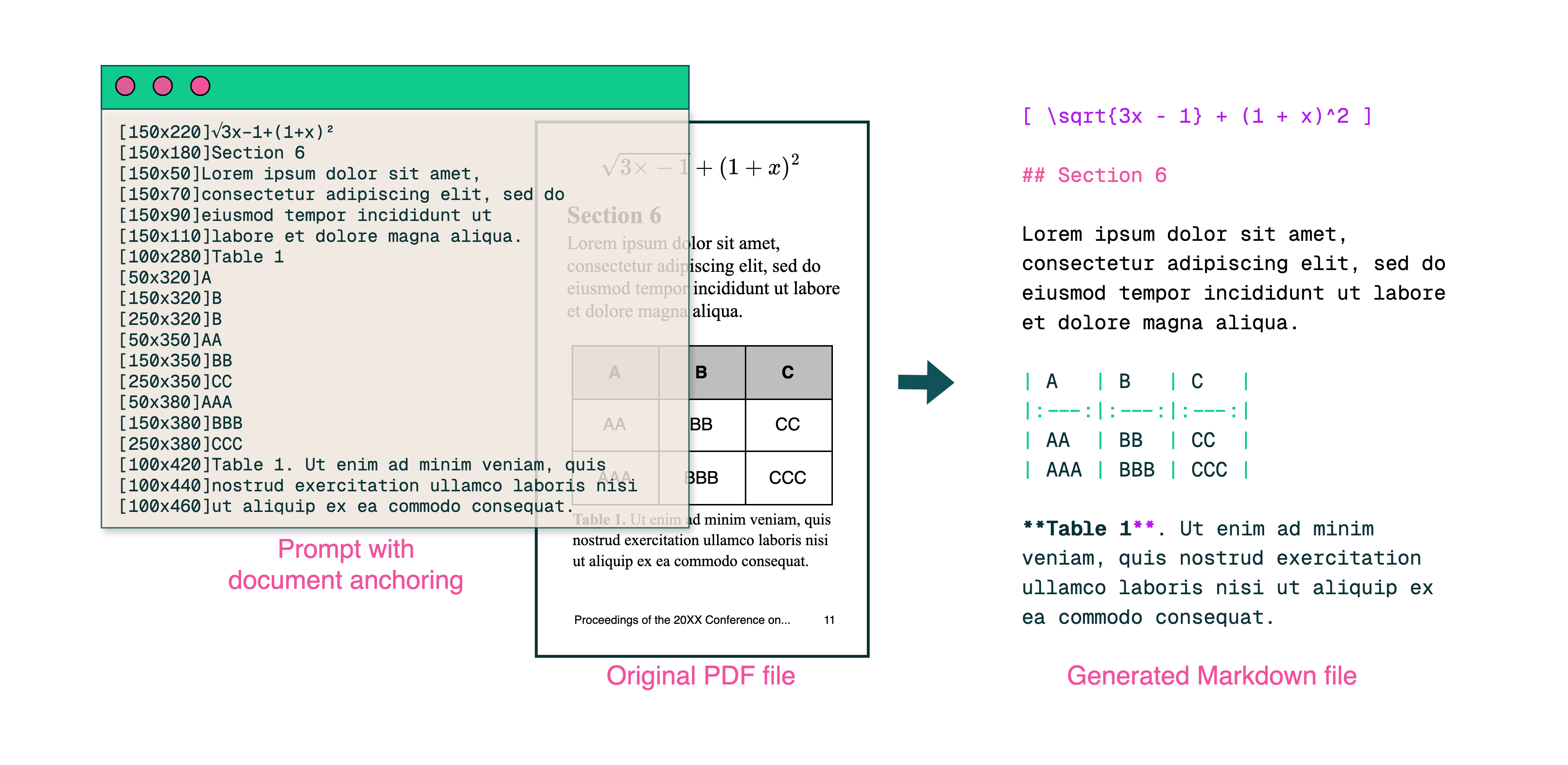

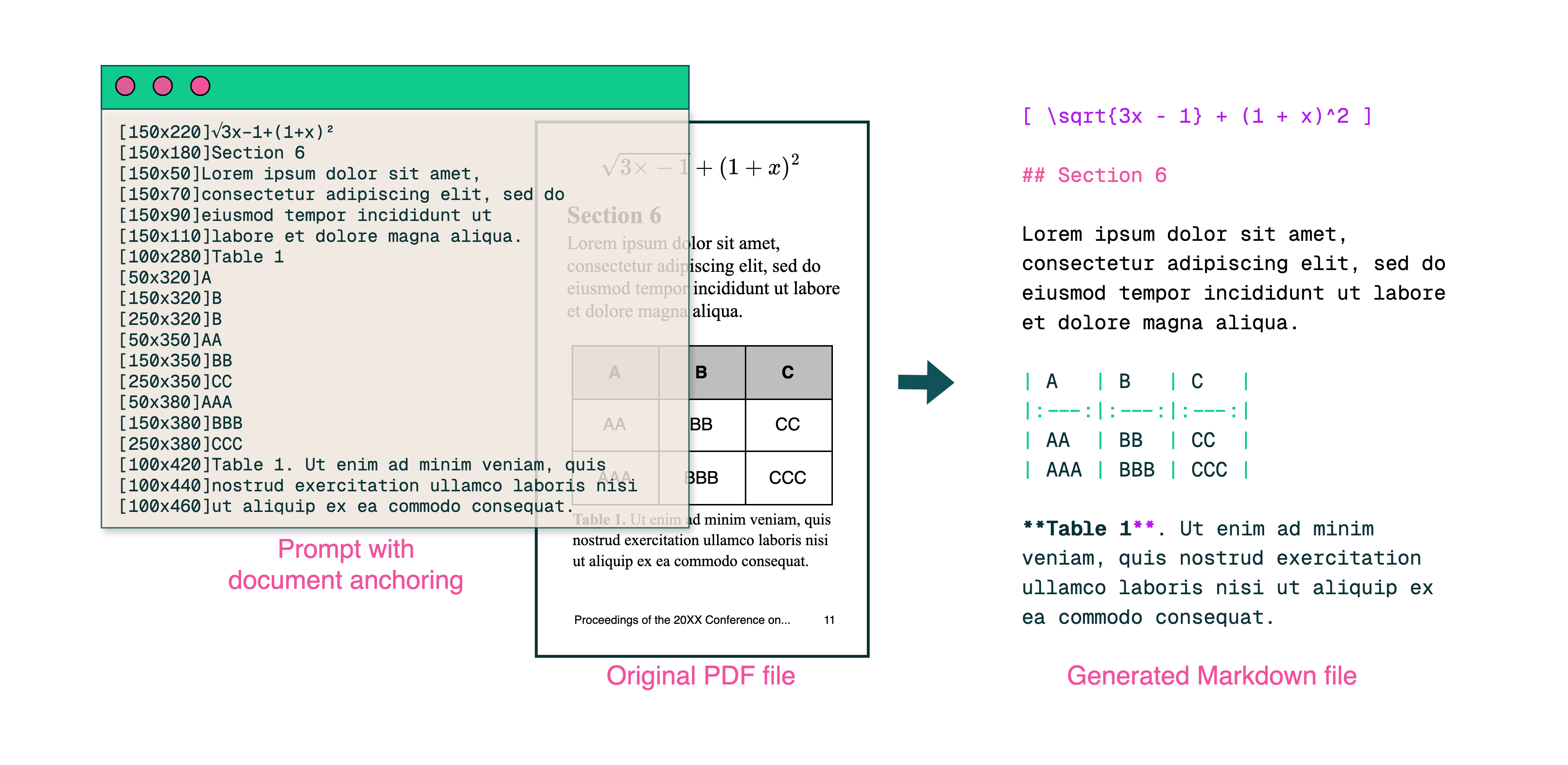

Caption: olmOCR's end-to-end pipeline converts messy PDFs into structured Markdown text at 1/32nd the cost of GPT-4o.

The Hidden Challenge of PDFs: Why Plain Text Matters

Language models thrive on clean text—but PDFs are the ultimate frenemy. Designed for printing, not parsing, they jumble text positions, bury tables in binary code, and turn equations into visual puzzles. Traditional OCR tools? They often miss formatting, struggle with multi-column layouts, or charge a fortune.

Enter olmOCR: an open-source toolkit that combines vision-language models (VLMs) with smart engineering to crack the PDF code. Let’s break down why developers and researchers are buzzing about it.

5 Reasons olmOCR Outshines Other Tools

- Cost Efficiency That’s Hard to Ignore

  Process 1 million pages for $190, roughly 1/32 the cost of GPT-4o's batch API. How? By fine-tuning on 250K diverse pages (academic papers, legal docs, even handwritten letters) and optimizing inference with SGLang/vLLM.

- Markdown Magic

  No more regex nightmares. olmOCR outputs clean Markdown with:

  - Preserved equations (E=mc²)

  - Tables that stay tables

  - Correct reading order for complex layouts

- Batteries-Included Pipeline

  python -m olmocr.pipeline ./workspace --pdfs your_file.pdf

  Scale from 1 to 100+ GPUs seamlessly. Built-in error handling tackles common PDF gremlins like metadata corruption.

- Open Source, Zero Black Boxes

  Weights, training data (yes, all 250K pages!), and code are public. Built on Qwen2-VL-7B-Instruct, with no proprietary dependencies.

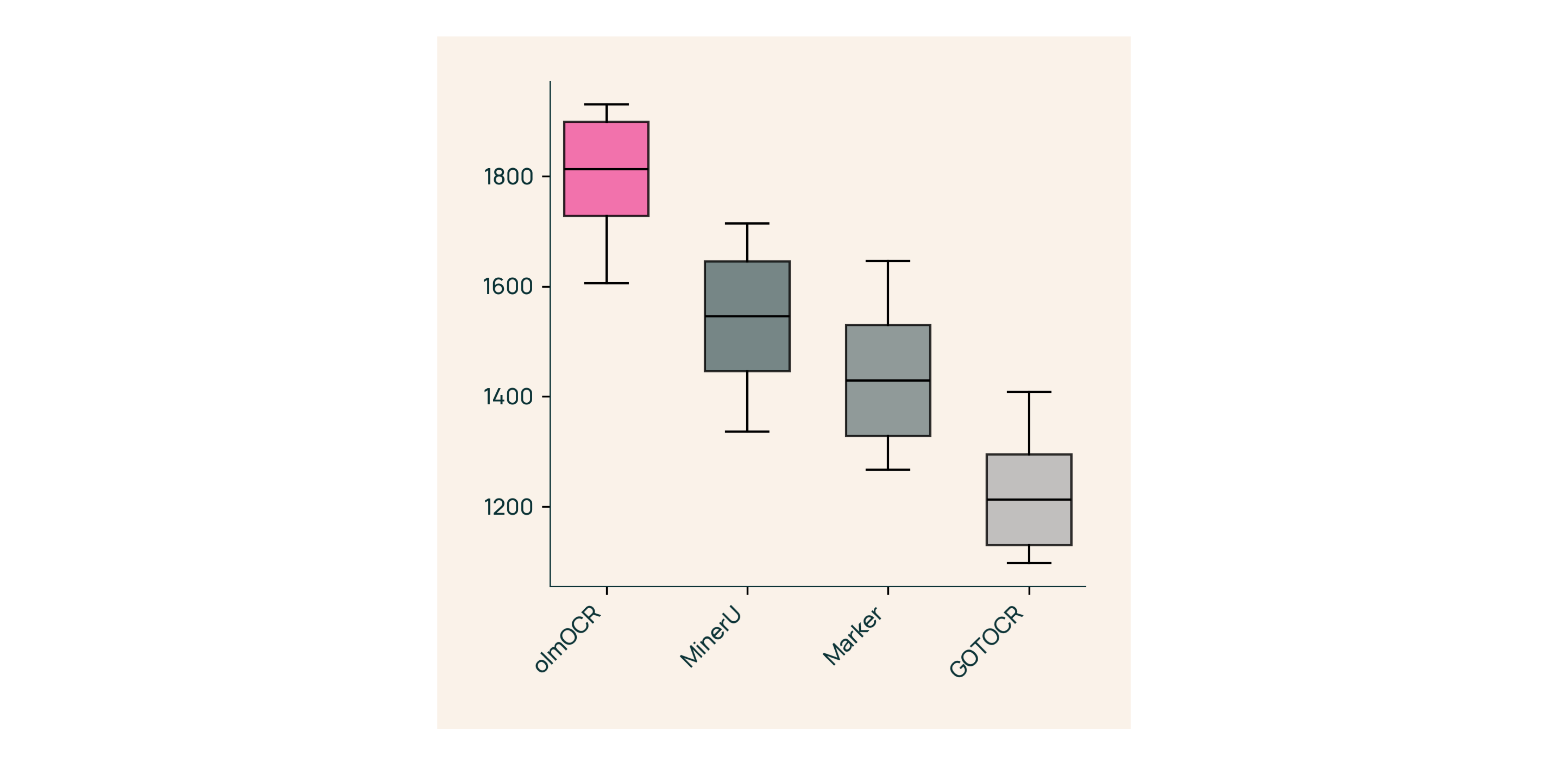

- Human-Proven Superiority

  In blind tests against Marker, GOT-OCR 2.0, and MinerU:

  - Wins 61% of comparisons

  - Achieves an Elo rating above 1800 (Gold Standard)
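The cost claim in the first reason is easy to sanity-check with a few lines of arithmetic. The sketch below uses only the two figures quoted above ($190 per million pages and the 32x ratio). The GPT-4o number it prints is simply implied by those two figures, not a quoted price, and the function name `cost_for_pages` is made up for illustration.

```python
def cost_for_pages(pages: int, usd_per_million: float) -> float:
    """Scale a per-million-page price to an arbitrary page count."""
    return pages / 1_000_000 * usd_per_million


OLMOCR_USD_PER_MILLION = 190.0  # figure quoted in this article
COST_RATIO = 32                 # "32x" figure quoted in this article

# Assumption: derived from the two numbers above, not an official price.
IMPLIED_GPT4O_USD_PER_MILLION = OLMOCR_USD_PER_MILLION * COST_RATIO

for pages in (10_000, 250_000, 1_000_000):
    ours = cost_for_pages(pages, OLMOCR_USD_PER_MILLION)
    theirs = cost_for_pages(pages, IMPLIED_GPT4O_USD_PER_MILLION)
    print(f"{pages:>9,} pages: olmOCR ${ours:,.2f} vs implied GPT-4o batch ${theirs:,.2f}")
```

Real costs depend on your GPU pricing and throughput, so treat these as back-of-the-envelope numbers.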

Under the Hood: How We Built olmOCR

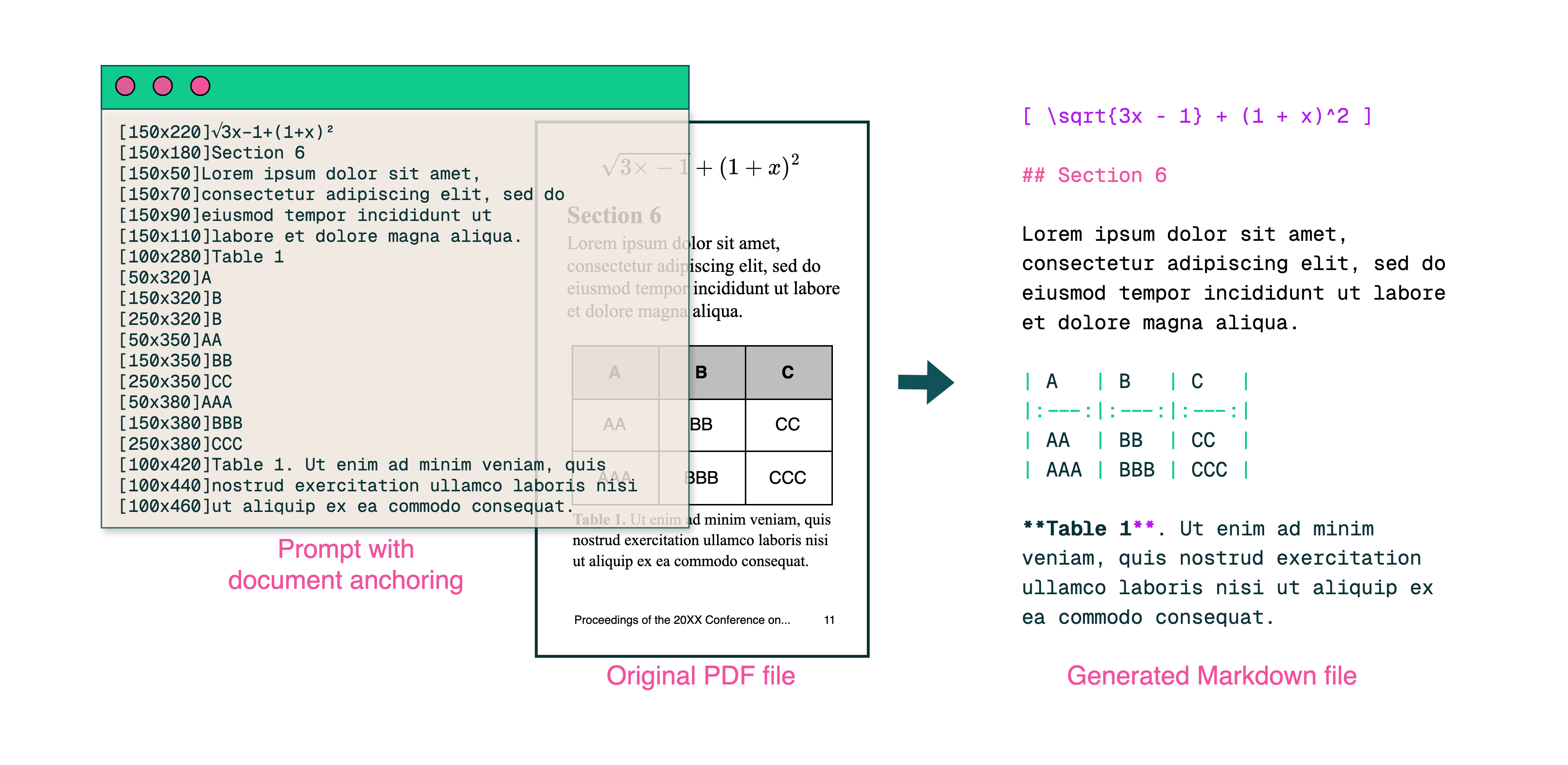

Document Anchoring: The Secret Sauce

Caption: Text + image context = accurate extraction.

We use PDFs’ own text/metadata to "anchor" VLMs during training:

- Extract text blocks & image regions

- Combine them in model prompts

- Let GPT-4o generate "gold standard" labels

Result? A model that understands both what text says and where it belongs.
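To make the anchoring idea concrete, here is a minimal sketch of how extracted text blocks and image regions might be serialized into the text half of a prompt. Everything in it is an assumption for illustration: the `TextBlock` and `ImageRegion` types, the bracketed coordinate format, and the prompt wording are hypothetical and are not olmOCR's actual prompt or code. The rendered page image would be sent to the model alongside this text.

```python
from dataclasses import dataclass


@dataclass
class TextBlock:
    """A run of text with the position of its corner on the page (hypothetical type)."""
    x: float
    y: float
    text: str


@dataclass
class ImageRegion:
    """Bounding box of an embedded image (hypothetical type)."""
    x0: float
    y0: float
    x1: float
    y1: float


def build_anchor_text(width, height, blocks, images, max_chars=4000):
    """Serialize page metadata into compact, position-tagged lines."""
    lines = [f"Page dimensions: {width:.1f}x{height:.1f}"]
    for img in images:
        lines.append(f"[Image {img.x0:.0f}x{img.y0:.0f} to {img.x1:.0f}x{img.y1:.0f}]")
    for block in blocks:
        lines.append(f"[{block.x:.0f}x{block.y:.0f}]{block.text}")

    # Keep whole lines until the character budget is used up.
    kept, used = [], 0
    for line in lines:
        if used + len(line) + 1 > max_chars:
            break
        kept.append(line)
        used += len(line) + 1
    return "\n".join(kept)


def build_prompt(anchor_text):
    """Wrap the anchor text in an instruction (wording is illustrative only)."""
    return (
        "Below is raw text extracted from one PDF page, tagged with positions. "
        "Using it together with the page image, return the page's text in "
        "natural reading order.\n"
        "RAW_TEXT_START\n" + anchor_text + "\nRAW_TEXT_END"
    )


blocks = [TextBlock(72, 720, "1 Introduction"),
          TextBlock(72, 700, "PDFs are designed for printing.")]
images = [ImageRegion(300, 400, 540, 600)]
print(build_prompt(build_anchor_text(612, 792, blocks, images)))
```

The character budget matters because dense pages can carry far more embedded text than fits comfortably in a prompt; dropping whole lines keeps the remaining anchors well-formed.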

Training for the Real World

- Dataset: 60% academic papers, 12% brochures, 11% legal docs

- Hardware: Optimized for NVIDIA GPUs, 90% lower energy use than comparable setups

- Fine-Tuning: Qwen2-VL-7B-Instruct adapted for document "conversations"

Try olmOCR in 3 Minutes

- Install

  git clone https://github.com/allenai/olmocr && cd olmocr
  pip install -e .

- Run on Sample PDF

  python -m olmocr.pipeline ./demo_output --pdfs tests/gnarly_pdfs/horribleocr.pdf

- Check the Markdown

  Open ./demo_output/horribleocr.md to see tables, equations, and text flow intact!
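Once the single-file run works, you may want to loop over a folder. The sketch below only builds and prints one command per PDF, using the same `python -m olmocr.pipeline <workspace> --pdfs <file>` form shown above. The helper names `build_command` and `commands_for_folder` are hypothetical, and the sketch does not assume any other flags exist.

```python
import shlex
import sys
from pathlib import Path


def build_command(workspace: str, pdf: str) -> list[str]:
    """Build the pipeline invocation for one PDF, mirroring the command above."""
    return [sys.executable, "-m", "olmocr.pipeline", workspace, "--pdfs", pdf]


def commands_for_folder(workspace: str, folder: str) -> list[list[str]]:
    """One command per *.pdf file in `folder`, in sorted order."""
    return [build_command(workspace, str(p)) for p in sorted(Path(folder).glob("*.pdf"))]


# Print the commands rather than running them, so this is safe to try anywhere.
for cmd in commands_for_folder("./demo_output", "tests/gnarly_pdfs"):
    print(shlex.join(cmd))
```

To actually execute each command, pass it to `subprocess.run(cmd, check=True)`, which raises an error as soon as one PDF fails instead of silently continuing.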

Final Take

olmOCR isn’t just another tool—it’s a paradigm shift. By marrying VLMs with transparent engineering, it makes high-quality text extraction accessible to everyone. Whether you’re building a research corpus or automating invoice processing, this toolkit belongs in your stack.

Next Steps

- ⭐ Star the GitHub repo

- 📊 Compare outputs using the Interactive Tool

- 💬 Join the discussion on Hugging Face

Let’s turn PDF pain into plain-text gain! 🚀